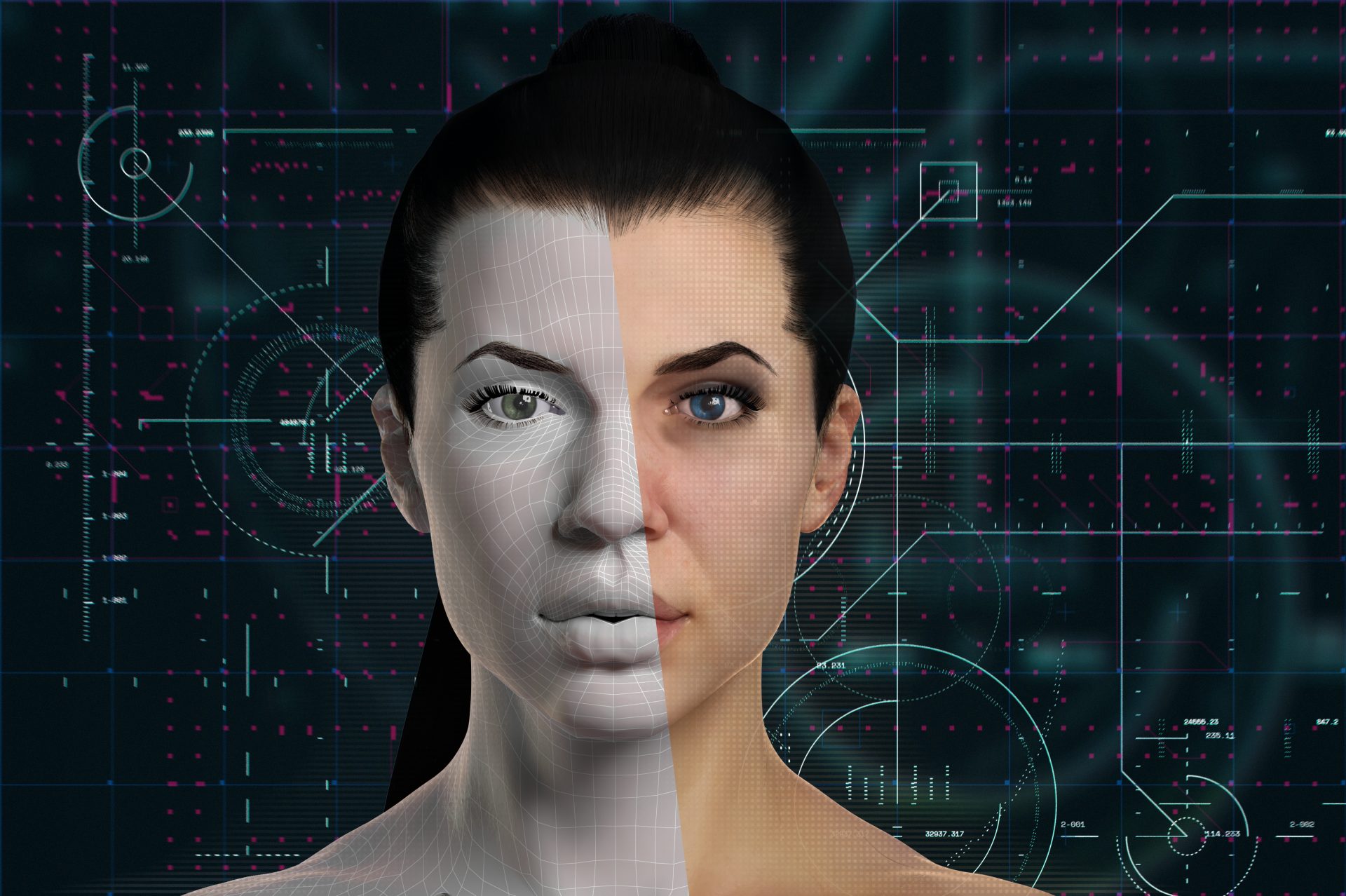

Seeing is believing? Deepfakes and other AI-generated content are becoming increasingly good at convincing people that what they see is real. Although content generated with publicly available tools and apps can still be easily detected by specialized software, it is quickly approaching the point where humans can no longer tell it apart from genuine material.

Jorge Luis Borges once wrote: “Reality is not always probable, or likely. But if you’re writing a story, you have to make it as plausible as you can because if you do not, the reader’s imagination will reject it”. Borges is arguably the foremost South American writer of the 20th century. Sadly (at least for us!), he did not live to see our highly polarized world, in which misinformation and “post-factual truth” seem to have invaded our daily routines.

We will never know whether he would have appreciated the subtle irony that machines are getting better than humans at deceiving humans, making up stories that fool our eyes and minds. In the last few years, their skills have improved so much that deepfake technology has become mainstream: dozens of specialized apps let users falsify images and videos, even on a mobile device. While the results are often unimpressive, more sophisticated versions of the same software have already been used to create far more convincing content.

Soon, deepfakes could become a very effective weapon for manipulating people’s opinions. Misinformation campaigners would gladly rely on such a powerful technology, which is already capable of swapping anyone’s face and voice and even of lip-syncing specific words in a video. Many potential criminal uses are easy to imagine: from manipulating political consensus to stock market scams and attacks on prominent personalities, the range of opportunities is considerable.

Media authentication, possibly reinforced by blockchain technology, could be an effective countermeasure to this kind of disinformation, since it can help identify the origin and the author of a piece of content. Still, the problem lies with people, and technology is just a tool that can be used for good or bad. Research shows that debunking does little to change most viewers’ attitude towards the source of misinformation, so why should an institutional system fare any better? Confirmation bias affects us all, regardless of education level.

Hence, source verification is only part of the solution. Given the massive amount of content published daily on social media platforms, this approach will always have a limited reach. It could even reinforce conspiracy theorists’ views, creating further polarization. Furthermore, in authoritarian countries it could become an insurmountable barrier to the plurality of information. Especially in the long term, detecting fake content will probably become a matter of developing a critical sense about what we see and (re)post or (re)tweet.

Unfortunately, this is not a quick process: if the aim is to develop a shared critical attitude towards the web and its content, much work is required to educate the population groups that need it most. It is crucial to start with the elderly and all those who are most vulnerable to this kind of deception, helping them develop solid digital skills.

Although technology can offer practical support in reaching this goal, we cannot heal our democracy and society without working on the accessibility and inclusiveness of the tools we use to spread ideas. Just as when writing and printing spread worldwide, limitations and censorship cannot be a definitive solution: people must learn to “read” in a digital environment and distinguish truth from invention, however plausible it may be.